xpipeline an explanation¶

Introduction¶

Xpipeline is an unmodelled Gravitational Wave Burst analysis algorithm aimed at using coherent detection statistics as well as coherent conistency checks to eliminate surperfluous noise transients and extact real gravitational wave events from the data.

In order to build the statistics necessary to claim a detection and to calculate the coherent statistics needed to eliminate excess back ground noise, xpipeline conists of performing the same statistical “trials” over stretches of data that couldhave a gravitational wave event and data which it is either not believed or not possible for it to contain a graviational wave event. In addition to these two stretches of data, an analysis is also performed on a variety of simulated signals which is injected into the data that is believed to contain a graviational wave in order that we may understand what to expect from an actual gravitational wave if it were to appear in the data during the stretch fo time believed to contain and gravitational wave.

Below we highlight what one of these “statistical trials” look like (either for the time that does or does not contain a graviational wave). First, we use time from a known graviational wave event to hightlight what the trial would look like for it, and then second we use a known noise transient to see what it would look like for it.

In this breakdown, we imagine we are running over a stretch of data due to an alert from a electromagnetic countrpart, i.e. an event that is believed to create photometric and graviational wave signatures.

Through the alert, there is some timestamp considered as an “event time”, and around said event time is some stretch of probable time that could contain the graviational wave counterpart to the observation.

The data corresponding to the appropriate stretch of plausible time when the gravitational wave could have passed through earth is obtained for N detectors that were operating during that time.

The data from these N detectors are then FFT’ed and taken from time-amplitude into time-frequency space. Since we have a plausible sky location of the source, not only can we shrink the amount of data we search for a graviational wave over, we can combine the data streams from the N detectors based on the known travel time of graviational wave (i.e. the speed of light).

The tfmaps of N-1 detectors are time/phase shifted appropriately with the sky location (locations if there is some error box).

From these individual maps loud time-frequency pixels are identified and a “coherent” set of pixels is calculated from the overlap of these individually loud pixels.

After these coherent (overlapping) pixels are calculted a chosen “clustering” algorithm is applied to the pizels that group near by loud pixels into possible graviational wave candidate events.

So essentially, our possible grviational wave events are simply a cluster of loud time frequency pixels upon which numerous fancy statistics will be applied (these statistics often are called coherent statistics as they are most useful when N streams of data can be projected combined together).

Specificlly, these clusters then are assigned a variety of likelihoods these likelihoods for each cluster are based on the concept of translating the data in the dominant polarization frame i.e. plus and cross polarized time frequency maps instead of the standard time frequency maps considered above.

So the final important stat calculated is energy_of_cluster * likelihood_of_cluster for a given likelihood.

These likelihood rely largely on the ability to project the N stream of gravitational wave data in the relative antenna response pattern weighted time frequency space (so fplus fcross, fscalar, etc)

In order to calculate these antenna weighted tfmaps we need to re-weight the ASDs of the data streams appropriately. This re-weighted ASD is then multiplied with the time frequency map obtained normally above.

So let us see what the above looks like if we had had an counterpart signal to the very first graviational wave detection.

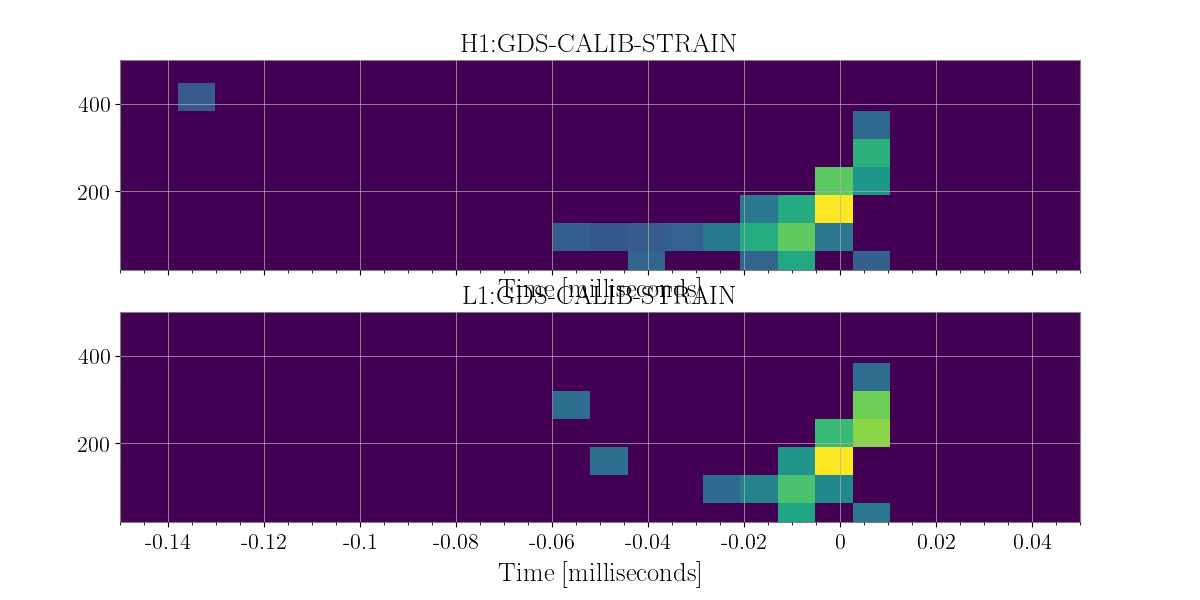

The Time-Frequency Map¶

The following is all open data obtained via LOSC

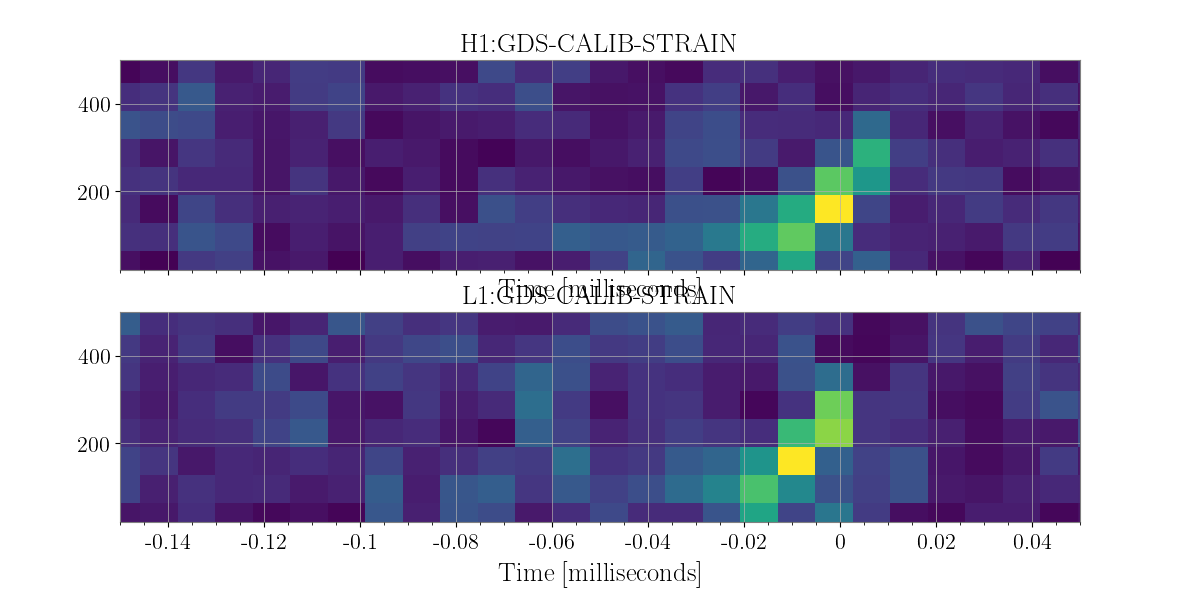

Data from Hanford and Livingston is obtained, and then whitened (one can think of this as a normalization process). The realty is that our detectors are more or less senstive to different frequencies and therefore in order to detect excess noise we must first “whiten that data.”

As I know the event time I have zoomed in close on the over all time frequnecy map so that the signal is quite clear even without any fancy statistics. Nonetheless, let us go through the whole process.

In [1]: from xpipeline.core.xtimeseries import XTimeSeries

In [2]: data = XTimeSeries.read('examples/GW150914.gwf', channels=['H1:GDS-CALIB_STRAIN','L1:GDS-CALIB_STRAIN'])

In [3]: asds = data.asd(1.0)

In [4]: whitened_timeseries = data.whiten(asds)

In [5]: fft_maps = whitened_timeseries.fftgram(1. /64)

In [6]: energy_maps = fft_maps.abs()

In [7]: gps = 1126259462.427

In [8]: plot = energy_maps.plot(figsize=[ 12, 6])

In [9]: for ax in plot.axes:

...: ax.set_xlim(gps - 0.15, gps + 0.05)

...: ax.set_epoch(gps)

...: ax.set_xlabel('Time [milliseconds]')

...: ax.set_ylim(20, 500)

...:

In [10]: plot

Out[10]: <Plot size 1200x600 with 2 Axes>

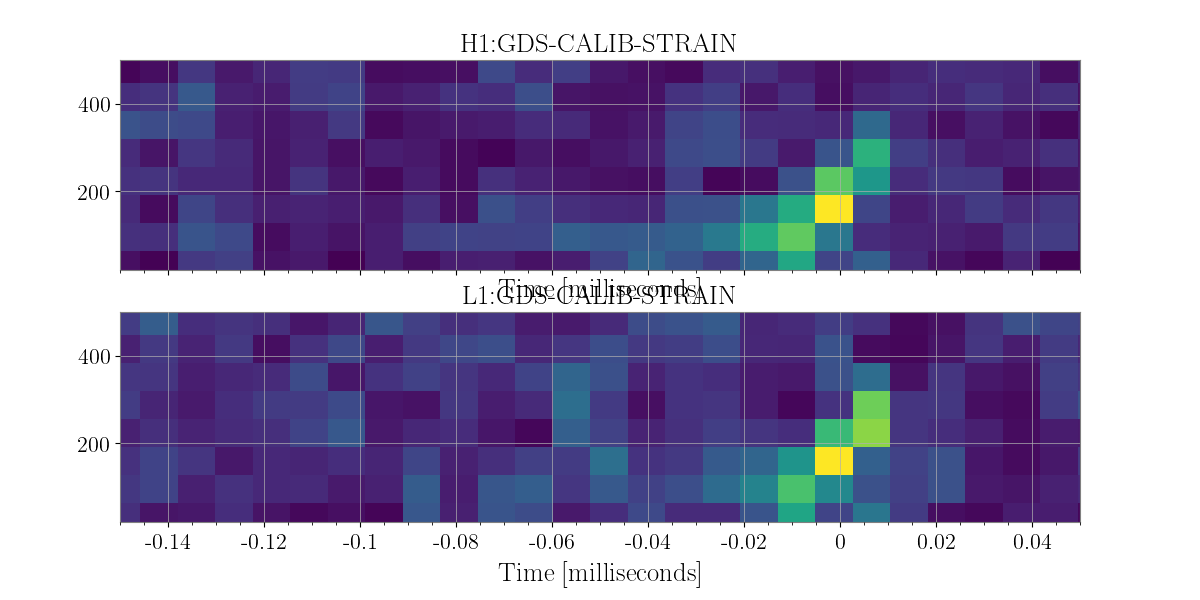

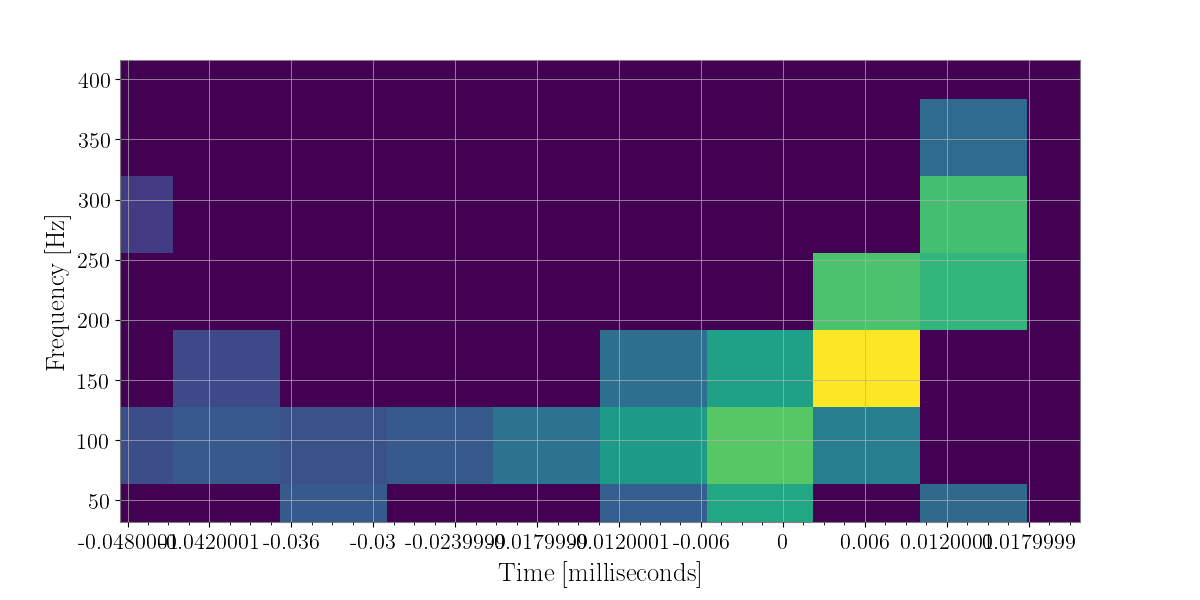

The Coherent Time-Frequency Map¶

In the above example you may notice that the data streams from both detectors do not seem to be in sync. Well this is because we have not utilized the most important concept in this unmodeled gravitational wave search analysis, the coherent combining of data streams, based upon an a sky location value known ahead of time.

In this example we use a sky location chosen from the sky map assocaited with GW150914 to illustrate what a coherent anlaysis might look like if say you had a Gamma-Ray-Burst or Supernova counterpart you were following up.

The way we can accomplish this is by either physically shifting the data of N-1 detectors relative to a baseline detector some delta T amount or we can phase shift the pixels of the timefrequencymap, here we physically shift the livingston data.

In [11]: from xpipeline.core.xdetector import Detector; import numpy

In [12]: hanford = Detector('H1')

In [13]: livingston = Detector('L1')

In [14]: phi = -0.3801; theta = 2.7477 # Earth fixed coordinates

In [15]: time_shift = numpy.round((livingston.time_delay_from_earth_center_phi_theta([phi], [theta]) - hanford.time_delay_from_earth_center_phi_theta([phi], [theta]))*data['H1:GDS-CALIB_STRAIN'].sample_rate)

In [16]: whitened_timeseries['L1:GDS-CALIB_STRAIN'] = numpy.roll(whitened_timeseries['L1:GDS-CALIB_STRAIN'], -time_shift.astype(int))

In [17]: fft_grams = whitened_timeseries.fftgram(1. /64)

In [18]: energy_maps = fft_grams.abs()

In [19]: plot = energy_maps.plot(figsize=[ 12, 6])

In [20]: for ax in plot.axes:

....: ax.set_xlim(gps - 0.15, gps + 0.05)

....: ax.set_epoch(gps)

....: ax.set_xlabel('Time [milliseconds]')

....: ax.set_ylim(20, 500)

....:

In [21]: plot

Out[21]: <Plot size 1200x600 with 2 Axes>

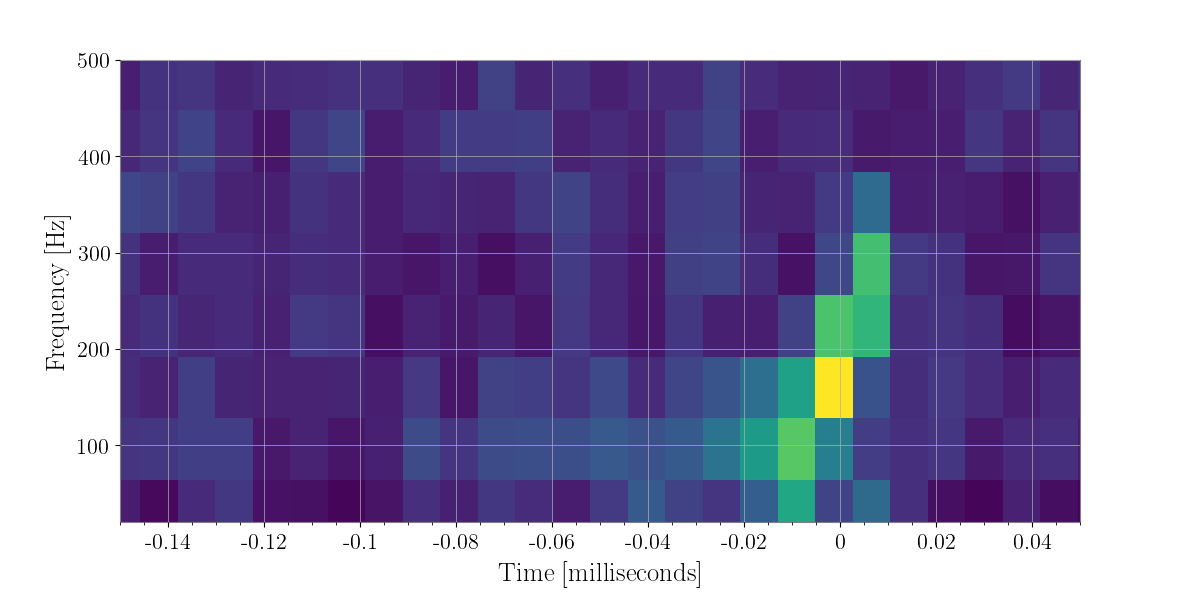

In [22]: coh_energy_maps = energy_maps.to_coherent()

In [23]: plot = coh_energy_maps.plot(figsize=[ 12, 6])

In [24]: for ax in plot.axes:

....: ax.set_xlim(gps - 0.15, gps + 0.05)

....: ax.set_epoch(gps)

....: ax.set_xlabel('Time [milliseconds]')

....: ax.set_ylim(20, 500)

....:

In [25]: plot

Out[25]: <Plot size 1200x600 with 1 Axes>

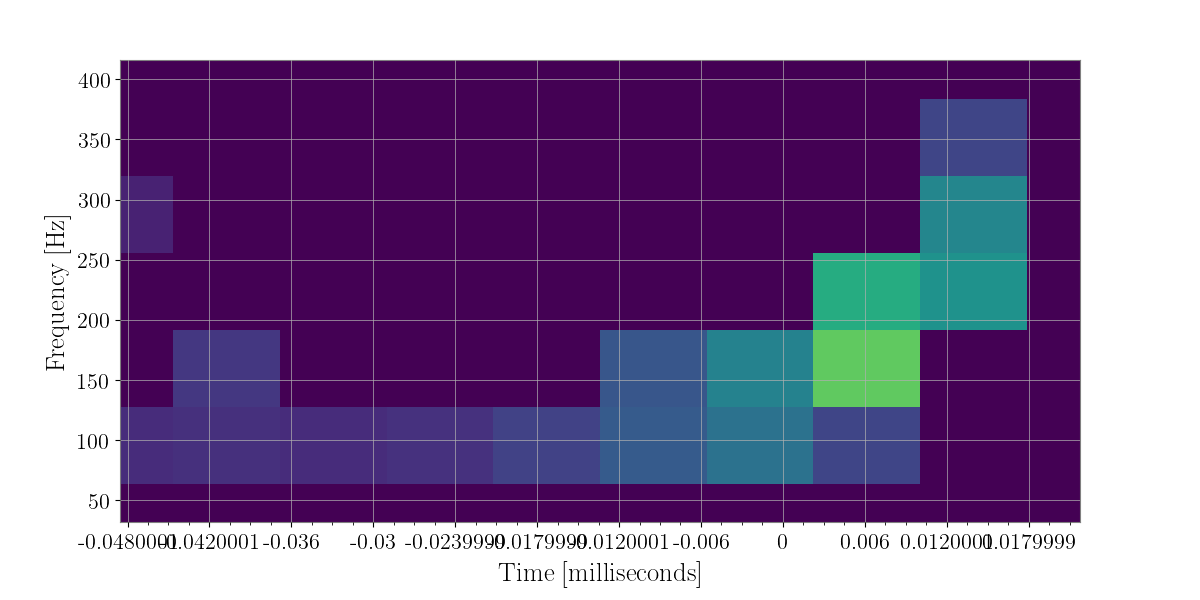

Clustering Pixels¶

There are a few ways to speed up the processing of the map. Many of the pixels are not going to be significant, so we can threhold on what pixels we want (say the loudest 1 percent of pixels) and then employ a method to group the pixels together in what are referred to as clusters. These clusters become our possible gravitational wave triggers on which we will later perform statistics on to determine whether they originate from gravitational wave source or not.

In order to retain the visual key of a time frequency map pixels being grouped and added together, but also perform algorithmic opertaions quickly we utilize a subclass of the scipy.sparse.csc_matrix class which is designed to efficiently perform operation on 2D matrices that are mostly zeroes (which is what happens when we set 99 percent of the pixels to zero).

The algorithm employed to label the remaining pixels in our map into groups is a nearest neighbor cpp wrapped algorithm called fastlabel.

In [26]: energy_map_zeroed = energy_maps.blackout_pixels(99)

In [27]: plot = energy_map_zeroed.plot(figsize=[ 12, 6])

In [28]: for ax in plot.axes:

....: ax.set_xlim(gps - 0.15, gps + 0.05)

....: ax.set_epoch(gps)

....: ax.set_xlabel('Time [milliseconds]')

....: ax.set_ylim(20, 500)

....:

In [29]: plot

Out[29]: <Plot size 1200x600 with 2 Axes>

In [30]: coh_map = energy_map_zeroed.to_coherent()

In [31]: tf_indices = coh_map.nonzero() # find the union of pixels from the sparse in coherent maps

In [32]: tindex = {k : tf_indices[0]

....: for k in energy_maps}

....:

In [33]: findex = {k : tf_indices[1]

....: for k in energy_maps}

....:

In [34]: energy_map_zeroed = energy_maps.to_sparse(tindex, findex)

In [35]: clusters = energy_map_zeroed.cluster() # cluster over the coherent pixels

In [36]: print(clusters)

min_time_of_cluster weighted_center_time max_time_of_cluster min_frequency_of_cluster ... number_of_pixels energy_of_cluster dt df

0 1.126259e+09 1.126259e+09 1.126259e+09 32.0 ... 20.0 4.091381 0.070312 384.0

1 1.126260e+09 1.126260e+09 1.126260e+09 288.0 ... 6.0 0.600904 0.015625 320.0

2 1.126259e+09 1.126259e+09 1.126259e+09 352.0 ... 6.0 0.596569 0.023438 256.0

3 1.126260e+09 1.126260e+09 1.126260e+09 416.0 ... 5.0 0.588640 0.015625 192.0

4 1.126260e+09 1.126260e+09 1.126260e+09 416.0 ... 6.0 0.575486 0.023438 192.0

5 1.126260e+09 1.126260e+09 1.126260e+09 288.0 ... 5.0 0.525573 0.015625 320.0

6 1.126259e+09 1.126259e+09 1.126259e+09 160.0 ... 5.0 0.514066 0.015625 320.0

7 1.126259e+09 1.126259e+09 1.126259e+09 352.0 ... 4.0 0.511123 0.007812 256.0

8 1.126260e+09 1.126260e+09 1.126260e+09 160.0 ... 5.0 0.504162 0.031250 192.0

9 1.126259e+09 1.126259e+09 1.126259e+09 96.0 ... 5.0 0.498445 0.015625 192.0

10 1.126259e+09 1.126259e+09 1.126259e+09 32.0 ... 5.0 0.483718 0.015625 256.0

11 1.126259e+09 1.126259e+09 1.126259e+09 416.0 ... 5.0 0.476363 0.023438 192.0

12 1.126260e+09 1.126260e+09 1.126260e+09 288.0 ... 4.0 0.475867 0.015625 256.0

13 1.126259e+09 1.126259e+09 1.126259e+09 224.0 ... 5.0 0.473756 0.007812 320.0

14 1.126259e+09 1.126259e+09 1.126259e+09 416.0 ... 4.0 0.471334 0.015625 192.0

15 1.126259e+09 1.126259e+09 1.126259e+09 416.0 ... 4.0 0.469871 0.015625 192.0

16 1.126259e+09 1.126259e+09 1.126259e+09 288.0 ... 5.0 0.444777 0.023438 192.0

17 1.126259e+09 1.126259e+09 1.126259e+09 480.0 ... 4.0 0.438836 0.015625 128.0

18 1.126260e+09 1.126260e+09 1.126260e+09 480.0 ... 4.0 0.437304 0.015625 128.0

19 1.126259e+09 1.126259e+09 1.126259e+09 416.0 ... 4.0 0.436201 0.015625 192.0

20 1.126260e+09 1.126260e+09 1.126260e+09 160.0 ... 4.0 0.434081 0.023438 192.0

21 1.126259e+09 1.126259e+09 1.126259e+09 224.0 ... 4.0 0.432303 0.015625 192.0

22 1.126259e+09 1.126259e+09 1.126259e+09 288.0 ... 4.0 0.431066 0.015625 256.0

23 1.126259e+09 1.126259e+09 1.126259e+09 480.0 ... 4.0 0.429848 0.023438 128.0

24 1.126259e+09 1.126259e+09 1.126259e+09 352.0 ... 4.0 0.427331 0.007812 256.0

25 1.126259e+09 1.126259e+09 1.126259e+09 96.0 ... 4.0 0.425198 0.015625 256.0

26 1.126259e+09 1.126259e+09 1.126259e+09 288.0 ... 4.0 0.420571 0.023438 192.0

27 1.126259e+09 1.126259e+09 1.126259e+09 96.0 ... 4.0 0.419265 0.015625 256.0

28 1.126259e+09 1.126259e+09 1.126259e+09 96.0 ... 4.0 0.419227 0.015625 192.0

29 1.126260e+09 1.126260e+09 1.126260e+09 96.0 ... 4.0 0.419072 0.015625 256.0

.. ... ... ... ... ... ... ... ... ...

410 1.126260e+09 1.126260e+09 1.126260e+09 288.0 ... 2.0 0.224290 0.007812 128.0

411 1.126259e+09 1.126259e+09 1.126259e+09 288.0 ... 2.0 0.224192 0.007812 128.0

412 1.126260e+09 1.126260e+09 1.126260e+09 160.0 ... 2.0 0.224183 0.007812 128.0

413 1.126259e+09 1.126259e+09 1.126259e+09 416.0 ... 2.0 0.224139 0.015625 64.0

414 1.126259e+09 1.126259e+09 1.126259e+09 224.0 ... 2.0 0.223967 0.007812 128.0

415 1.126260e+09 1.126260e+09 1.126260e+09 160.0 ... 2.0 0.223742 0.007812 128.0

416 1.126260e+09 1.126260e+09 1.126260e+09 416.0 ... 2.0 0.223625 0.007812 128.0

417 1.126260e+09 1.126260e+09 1.126260e+09 480.0 ... 2.0 0.223332 0.007812 128.0

418 1.126259e+09 1.126259e+09 1.126259e+09 224.0 ... 2.0 0.223289 0.015625 128.0

419 1.126260e+09 1.126260e+09 1.126260e+09 480.0 ... 2.0 0.223233 0.007812 128.0

420 1.126260e+09 1.126260e+09 1.126260e+09 96.0 ... 2.0 0.223163 0.015625 128.0

421 1.126259e+09 1.126259e+09 1.126259e+09 224.0 ... 2.0 0.223101 0.007812 128.0

422 1.126259e+09 1.126259e+09 1.126259e+09 480.0 ... 2.0 0.223035 0.007812 128.0

423 1.126259e+09 1.126259e+09 1.126259e+09 416.0 ... 2.0 0.222940 0.007812 128.0

424 1.126259e+09 1.126259e+09 1.126259e+09 224.0 ... 2.0 0.222831 0.015625 64.0

425 1.126260e+09 1.126260e+09 1.126260e+09 224.0 ... 2.0 0.222340 0.015625 64.0

426 1.126259e+09 1.126259e+09 1.126259e+09 288.0 ... 2.0 0.222337 0.007812 128.0

427 1.126259e+09 1.126259e+09 1.126259e+09 352.0 ... 2.0 0.221887 0.007812 128.0

428 1.126260e+09 1.126260e+09 1.126260e+09 480.0 ... 2.0 0.221638 0.007812 128.0

429 1.126260e+09 1.126260e+09 1.126260e+09 480.0 ... 2.0 0.221473 0.007812 128.0

430 1.126259e+09 1.126259e+09 1.126259e+09 480.0 ... 2.0 0.221261 0.007812 128.0

431 1.126259e+09 1.126259e+09 1.126259e+09 160.0 ... 2.0 0.221158 0.007812 128.0

432 1.126260e+09 1.126260e+09 1.126260e+09 480.0 ... 2.0 0.221042 0.007812 128.0

433 1.126260e+09 1.126260e+09 1.126260e+09 480.0 ... 2.0 0.221036 0.007812 128.0

434 1.126259e+09 1.126259e+09 1.126259e+09 480.0 ... 2.0 0.221036 0.007812 128.0

435 1.126260e+09 1.126260e+09 1.126260e+09 416.0 ... 2.0 0.220916 0.007812 128.0

436 1.126259e+09 1.126259e+09 1.126259e+09 288.0 ... 2.0 0.220749 0.007812 128.0

437 1.126259e+09 1.126259e+09 1.126259e+09 352.0 ... 2.0 0.220685 0.007812 128.0

438 1.126260e+09 1.126260e+09 1.126260e+09 352.0 ... 2.0 0.220512 0.007812 128.0

439 1.126260e+09 1.126260e+09 1.126260e+09 96.0 ... 2.0 0.220464 0.007812 128.0

[440 rows x 10 columns]

Now the we have labelled our remaining pixels (the non-zeroed out pixels), let’s extract some of the cluster properites of these clusters. i.e. how many pixels are in the cluster the bounding box of the cluster (i.e. [[min-time, max-time], [min-freq, max-freq]] and the sum of energy over the cluster.

Specifically, the cpp wrapped function clusterproperities outputs the following information

column 0: minimum time of cluster

column 1: weighted center time of cluster

column 2: maximum time of cluster

column 3: minimum frequency of cluster

column 4: weighted center frequency of cluster

column 5: maximum frequency of cluster

column 6: number of pixels in cluster

column 7: sum-over-cluster map values for each likelihood

Before we do this though, we must re-make our sparse maps. for we have zeroed out some pixels in either map that are now part of our clusters. i.e. some pixels may have been in the top 1 percent of one but not all maps.

In [37]: min_time = clusters['min_time_of_cluster'].iloc[0]; max_time = clusters['max_time_of_cluster'].iloc[0]; weighted_center_time = clusters['weighted_center_time'].iloc[0]; min_freq = clusters['min_frequency_of_cluster'].iloc[0]; max_freq = clusters['max_frequency_of_cluster'].iloc[0];

In [38]: plot = energy_map_zeroed.to_xtimefrequencymapdict().to_coherent().plot()

In [39]: for ax in plot.axes:

....: ax.set_xlim(min_time, max_time)

....: ax.set_epoch(weighted_center_time)

....: ax.set_xlabel('Time [milliseconds]')

....: ax.set_ylim(min_freq, max_freq)

....:

In [40]: plot

Out[40]: <Plot size 1200x600 with 1 Axes>

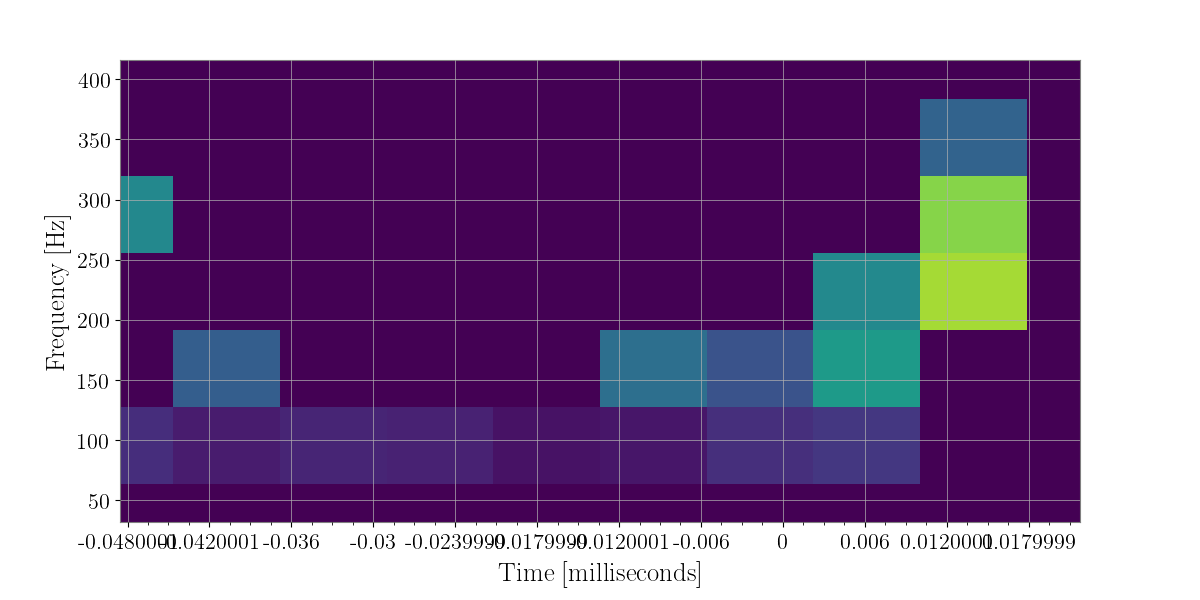

The Dominant Polarization Frame¶

Now the we have a sky location assosciated with the event we can project every time-freqeuncy pixel into the Dominant Polarization Frame (DPF). What this means is that we assume the GW has a plus and cross polarization there is some orthoganal projection of the pixels onto the plus-cross plane for 2 or more detectors

In [41]: from xpipeline.core.xdetector import compute_antenna_patterns

In [42]: phi = -0.3801; theta = 2.7477 # Earth fixed coordinates

In [43]: antenna_patterns = compute_antenna_patterns(['H1', 'L1'], phi, theta, antenna_patterns=['f_plus', 'f_cross', 'f_scalar'])

In [44]: projected_asds = asds.project_onto_antenna_patterns(antenna_patterns, to_dominant_polarization_frame=True)

In [45]: projected_fftmaps = fft_grams.to_dominant_polarization_frame(projected_asds)

Now that we have projected each pixels onto the plus and cross phase + amplitude space Let’s see what it looks like if we simply take these projections and plot them.

In [46]: sparse_projected_fftmaps = {k : v.to_sparse(tindex, findex) for k, v in projected_fftmaps.items()}

In [47]: plot = sparse_projected_fftmaps['f_plus'].to_xtimefrequencymapdict().to_coherent().abs().plot()

In [48]: for ax in plot.axes:

....: ax.set_xlim(min_time, max_time)

....: ax.set_epoch(weighted_center_time)

....: ax.set_xlabel('Time [milliseconds]')

....: ax.set_ylim(min_freq, max_freq)

....:

In [49]: plot

Out[49]: <Plot size 1200x600 with 1 Axes>

In [50]: plot = sparse_projected_fftmaps['f_cross'].to_xtimefrequencymapdict().to_coherent().abs().plot()

In [51]: for ax in plot.axes:

....: ax.set_xlim(min_time, max_time)

....: ax.set_epoch(weighted_center_time)

....: ax.set_xlabel('Time [milliseconds]')

....: ax.set_ylim(min_freq, max_freq)

....:

In [52]: plot

Out[52]: <Plot size 1200x600 with 1 Axes>

Likelihoods¶

So now that we have possible graviational wave candidates in the form of clusters of loud pixels and the projected values of those pixels, how do we “rank” these clusters in order or more or less likely to have orginiated from graviational wave origins.

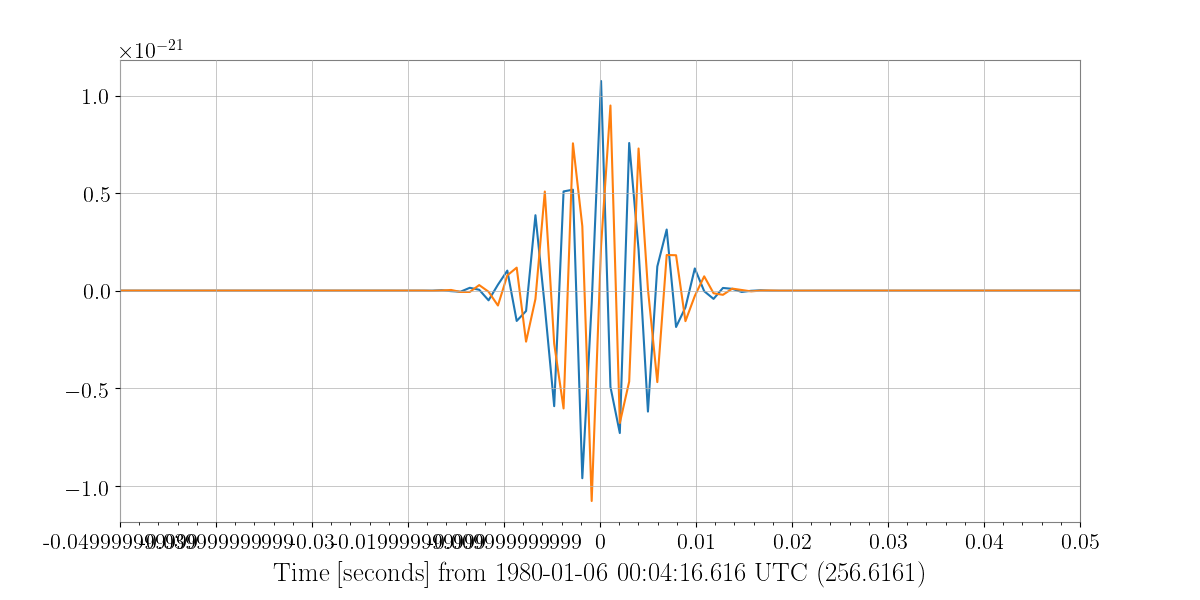

The Waveform¶

In order to train these likelihoods so we can understand what values to expect from gravitational wave clusters instead of random noise fluctations or glitches we must inject a number of fake gravitational wave like signals.

This involves to steps, generating a gravitational-wave like waveform on the fly and then injecting that signal into a stretch of data.

The parameters that go into xmakewaveform are the family of waveform, a set of parameters specific for that waveform. In this case, the hrss is the quadrature sum of the RSS amplitudes of the plus and cross polarizations, tau is the duration, f0 is the central frequency, alpha is the chirp parameter, and delta is the phase at the peak of the envelope.

In [53]: from xpipeline.waveform import xwaveform

In [54]: from gwpy.plot import Plot

In [55]: t, hp, hc, hb = xwaveform.xmakewaveform(family='chirplet', parameters=[1e-22, 0.0033, 300.0, 0, 0, 1], T=513, T0=256.6161, fs=1024)

In [56]: fig = Plot(hp, hc)

In [57]: ax = fig.gca()

In [58]: ax.set_epoch(256.6161)

In [59]: ax.set_xlim([256.6161 - 0.05, 256.6161 + 0.05])

Out[59]: (256.5661, 256.6661)

In [60]: plot

������������������������������Out[60]: <Plot size 1200x600 with 1 Axes>

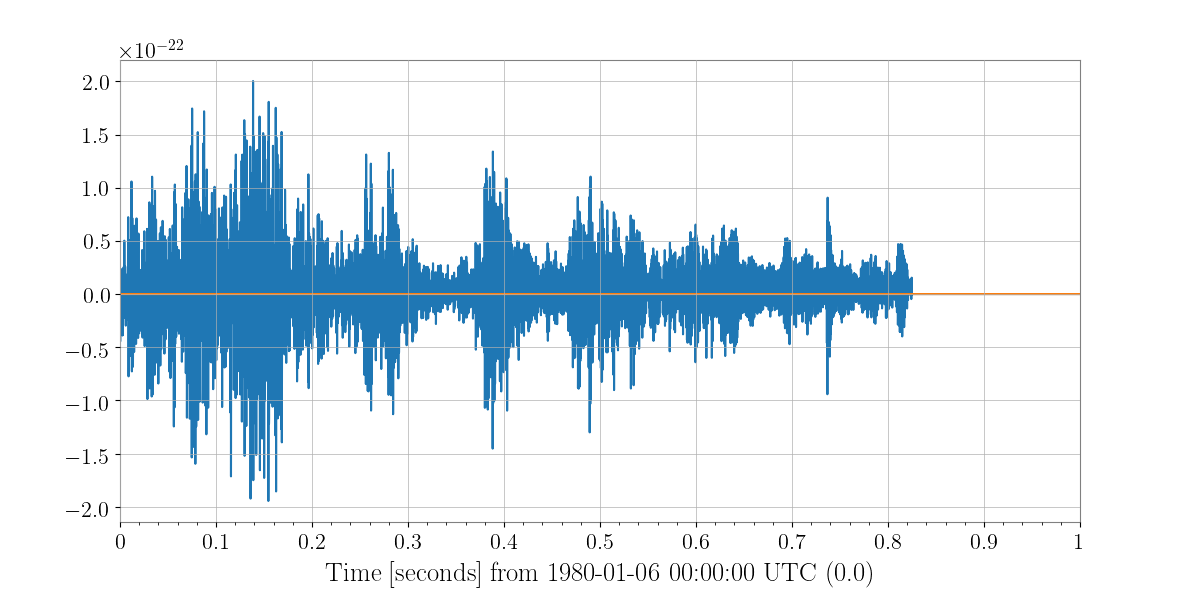

Now let’s say this is not an analytical waveform and instead an hplus and hcross from say a supernova simulation. We can also handles that, tracked by git-lfs, the waveforms folder of X-Pypeline repository houses a number of hdf5 files full of pregenerated waveforms.

In [61]: from xpipeline.waveform import xwaveform

In [62]: from gwpy.plot import Plot

In [63]: t, hp, hc, hb = xwaveform.xmakewaveform(family='Morozova2018',

....: parameters=[8, 'M10_LS220'],

....: T=1, T0=0, fs=16384, catalogdirectory='../waveforms/xpipeline-waveforms')

....:

In [64]: fig = Plot(hp, hc)

In [65]: ax = fig.gca()

In [66]: ax.set_xlim([0, 1.0])

Out[66]: (0.0, 1.0)

In [67]: plot

��������������������Out[67]: <Plot size 1200x600 with 1 Axes>

The Injection¶

In a coherent search it is not enough to simply inject any old signal. You must take in a set of sky coordinates and project an individual signal with its antenna pattern (for example Fp*hp and Fc*hc) just like we do for the data. Let us say we have the SN waveform from above now we will assume this SN signal occurred at the same earth fixed coordinates of GW150914 from above, but since this is a simulation let us imagine VIRGO was on at the time too.

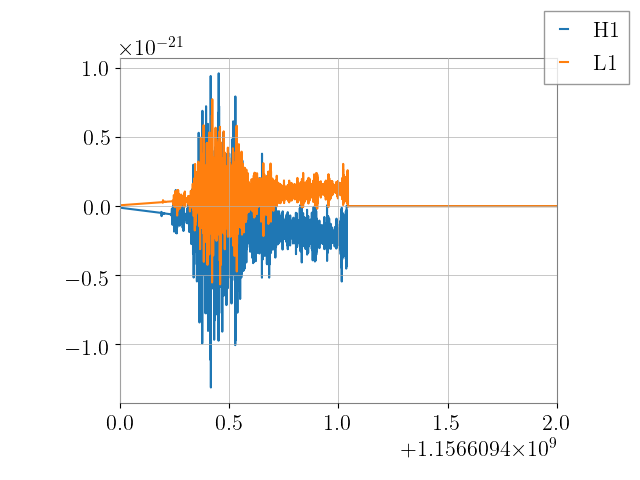

In [68]: from xpipeline.waveform import xinjectsignal

In [69]: start_time = 1156609396.0; block_time = 256; channels = ['H1', 'L1']; sample_rate = 16384; injection_file_name ='examples/injection_Morozova.txt'; injection_number=2; catalogdirectory='../waveforms/xpipeline-waveforms';

In [70]: [injection_data, gps_s, gps_ns, phi, theta, psi] = xinjectsignal.xinjectsignal_fromfile(start_time=start_time, block_time=block_time, channels=channels, injection_file_name=injection_file_name, injection_number=injection_number, sample_rate= sample_rate, catalogdirectory=catalogdirectory)

In [71]: from gwpy.plot import Plot

In [72]: fig = Plot()

In [73]: ax = fig.gca()

In [74]: for k, v in injection_data.items():

....: ax.plot(v, label=k,)

....:

In [75]: ax.set_xlim([gps_s, gps_s + 2])

Out[75]: (1156609400, 1156609402)

In [76]: fig.legend()

����������������������������������Out[76]: <matplotlib.legend.Legend at 0x10ec83cc0>

In [77]: plot

�������������������������������������������������������������������������������������Out[77]: <Plot size 1200x600 with 1 Axes>